The new AI frontier in investment portfolio

Background

I would like to introduce some papers that bridge deep learning and traditional financial theory (especially in the field of investments), in the hope that the techniques they employ can serve as components in new investment and risk management systems.

Content

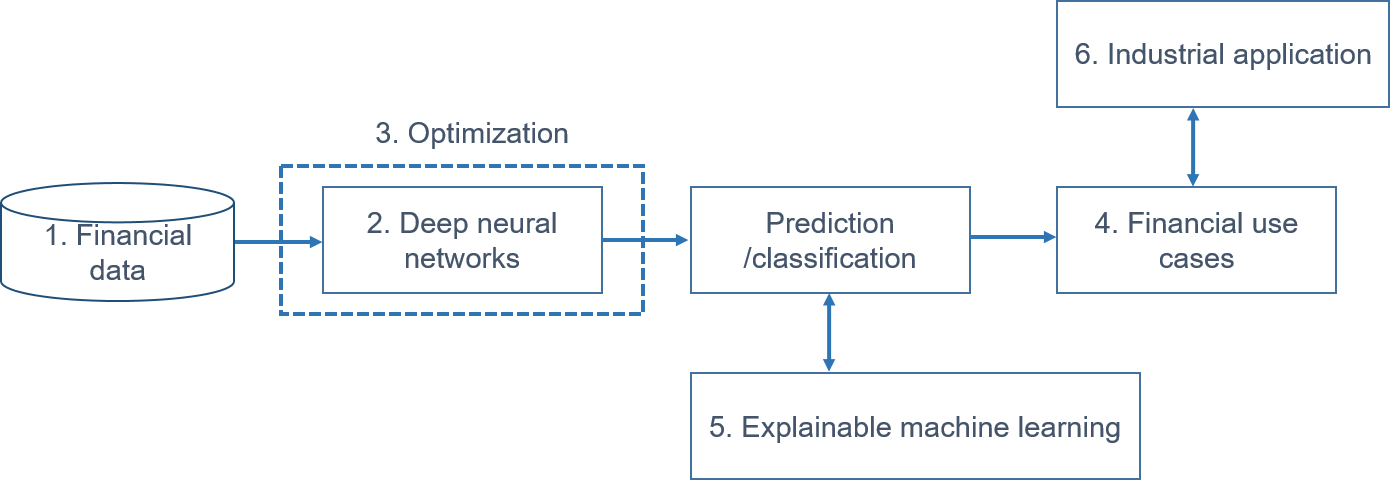

The content consists of six stages: 1. data, 2. deep neural networks, 3. optimization, 4. financial use cases, 5. explainable machine learning, and 6. industrial application.

1. Financial data

Technical indicators

-

Forecasting price movements using technical indicators: Investigating the impact of varying input window length(2017), Y. Shynkevich et al. [pdf]

Macroeconomic indicators

-

An Algorithmic Crystal Ball: Forecasts-based on Machine Learning(2018), J.-K. Jung et al. [pdf]

Fundamental indicators

-

Deep Learning for Predicting Asset Returns(2018), G. Feng et al. [pdf]

- Focus: It finds the existence of nonlinear factors which explain predictability of returns, in particular at the extremes of the characteristic space.

Multi-indicators

-

Discovering Bayesian Market Views for Intelligent Asset Allocation(2018), F. Z. Xing et al. [pdf]

- Focus: It proposes to formalize public mood into market views, because market views can be integrated into modern portfolio theory. In the framework, the optimal market views maximize returns in each period under a Bayesian asset allocation model. It uses capitalization, price, volume, and sentiment data to train two neural models that generate the market views, and benchmarks their performance against other popular asset allocation strategies.

-

Visual Attention Model for Cross-sectional Stock Return Prediction and End-to-End Multimodal Market Representation Learning(2018), R. Zhao et al. [pdf]

- Focus: Using price-volume data, historical returns, technical indicators, and fundamental indicators, it forms a market image and applies a convolutional neural network over it to build market features in a hierarchical way. It then uses a recurrent neural network, with an attention mechanism over the market feature maps, to model temporal dynamics in the market.
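As a rough illustration of how a market view can be integrated into portfolio theory, as the Xing et al. entry above describes, the following sketch applies a Black-Litterman style Bayesian update for a single view. Treating Black-Litterman as the Bayesian asset allocation model is an assumption here, and the function name `black_litterman_single_view`, the value of `tau`, and all numbers are hypothetical.

```python
def black_litterman_single_view(pi, sigma, p, q, omega, tau=0.05):
    """Posterior expected returns given one linear view p . r = q.

    pi    : prior expected returns (list of length n)
    sigma : return covariance matrix (n x n nested list)
    p     : view portfolio weights (list of length n)
    q     : expected return of the view portfolio
    omega : variance (uncertainty) of the view
    tau   : scaling of the prior covariance (assumed value)
    """
    n = len(pi)
    # tau * Sigma * p^T, a vector of length n
    ts_p = [tau * sum(sigma[i][j] * p[j] for j in range(n)) for i in range(n)]
    # Scalar p * (tau * Sigma) * p^T + omega
    denom = sum(p[i] * ts_p[i] for i in range(n)) + omega
    # How far the view is from what the prior already implies
    surprise = q - sum(p[i] * pi[i] for i in range(n))
    return [pi[i] + ts_p[i] * surprise / denom for i in range(n)]


# Hypothetical two-asset example: the view says asset 0 beats asset 1 by 2%.
pi = [0.04, 0.06]
sigma = [[0.04, 0.01], [0.01, 0.09]]
p = [1.0, -1.0]
q = 0.02

posterior = black_litterman_single_view(pi, sigma, p, q, omega=0.001)
certain_view = black_litterman_single_view(pi, sigma, p, q, omega=0.0)
```

With `omega = 0` the posterior spread between the two assets matches the view exactly; as `omega` grows, the posterior falls back toward the prior.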

2. Deep neural networks

2.1 Multi-layer perceptron

2.2 Recurrent neural networks

-

Deep learning with long short-term memory networks for financial market predictions(2018), T. Fischer and C. Krauss. [pdf]

-

Modeling Long- and Short-Term Temporal Patterns with Deep Neural Networks(2018), Guokun Lai, Wei-Cheng Chang, Yiming Yang, Hanxiao Liu. [pdf]

- Focus: Multivariate time series forecasting is an important machine learning problem across many domains, including predictions of solar plant energy output, electricity consumption, and traffic jam situations. Temporal data arising in these real-world applications often involve a mixture of long-term and short-term patterns, for which traditional approaches such as autoregressive models and Gaussian processes may fail. The paper proposes a deep learning framework, the Long- and Short-term Time-series network (LSTNet), to address this challenge. LSTNet uses a convolutional neural network (CNN) and a recurrent neural network (RNN) to extract short-term local dependency patterns among variables and to discover long-term patterns for time series trends. It also adds a traditional autoregressive model to tackle the scale insensitivity of the neural network model. In an evaluation on real-world data with complex mixtures of repetitive patterns, LSTNet achieved significant performance improvements over several state-of-the-art baseline methods. All data and experiment code are available online.

-

AdaRNN: Adaptive Learning and Forecasting of Time Series(2021), Yuntao Du, Jindong Wang, Wenjie Feng, Sinno Pan, Tao Qin, Renjun Xu, Chongjun Wang. [pdf]

- Focus: Time series have wide applications in the real world and are known to be difficult to forecast. Because their statistical properties change over time, their distribution also changes temporally, which causes a severe distribution shift problem for existing methods. Modeling time series from the distribution perspective, however, remains unexplored. The paper terms this problem Temporal Covariate Shift (TCS) and proposes Adaptive RNNs (AdaRNN) to tackle it by building an adaptive model that generalizes well on unseen test data. AdaRNN is sequentially composed of two novel algorithms. First, Temporal Distribution Characterization better characterizes the distribution information in the time series. Second, Temporal Distribution Matching reduces the distribution mismatch in the time series to learn the adaptive model. AdaRNN is a general framework into which flexible distribution distances can be integrated. Experiments on human activity recognition, air quality prediction, and financial analysis show that AdaRNN outperforms the latest methods by 2.6% in classification accuracy and significantly reduces the RMSE by 9.0%. The temporal distribution matching algorithm can also be extended to the Transformer structure to boost its performance.

2.3 Convolutional neural networks

-

Algorithmic financial trading with deep convolutional neural networks: Time series to image conversion approach(2018), O. B. Sezer and A. M. Ozbayoglu. [pdf]

- Focus: It proposes a novel algorithmic trading model CNN-TA using a 2-D convolutional neural network based on image processing properties. In order to convert financial time series into 2-D images, 15 different technical indicators each with different parameter selections are utilized.
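The time-series-to-image conversion described for CNN-TA can be sketched as follows. This is a simplified stand-in, not the paper's pipeline: it uses a single indicator (a simple moving average) with 15 assumed window lengths instead of 15 different indicators, and the helper names, the 15-day image width, and the synthetic prices are all hypothetical.

```python
def sma(prices, window):
    """Simple moving average; None until enough history is available."""
    out = []
    for t in range(len(prices)):
        if t + 1 < window:
            out.append(None)
        else:
            out.append(sum(prices[t + 1 - window:t + 1]) / window)
    return out


def indicator_image(prices, windows, days):
    """Build a len(windows) x days grid: one row per indicator setting,
    one column per day, each row min-max scaled to [0, 1]."""
    rows = []
    for w in windows:
        series = sma(prices, w)[-days:]
        if len(series) < days or any(v is None for v in series):
            raise ValueError("not enough price history for window %d" % w)
        lo, hi = min(series), max(series)
        span = hi - lo
        # A flat row (span == 0) is mapped to zeros to avoid division by zero.
        rows.append([(v - lo) / span if span > 0 else 0.0 for v in series])
    return rows


# Synthetic upward-trending prices with a small weekly bump (made-up data).
prices = [100 + 0.5 * t + (3 if t % 7 == 0 else 0) for t in range(60)]
windows = list(range(6, 21))  # 15 window lengths, 6 through 20
image = indicator_image(prices, windows, days=15)  # 15 x 15 grid
```

Each such grid could then be labeled (for example buy, hold, or sell) and fed to a 2-D convolutional network, which is the part this sketch leaves out.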

2.4 Autoencoder

2.5 Generative adversarial networks

-

C-RNN-GAN: Continuous recurrent neural networks with adversarial training(2016), Olof Mogren. [pdf]

- Focus: Generative adversarial networks have been proposed as a way of efficiently training deep generative neural networks. It proposes a generative adversarial model that works on continuous sequential data and applies it by training on a collection of classical music. It concludes that the generated music sounds better and better as the model is trained, reports statistics on the generated music, and lets the reader judge the quality by downloading the generated songs.

-

Time-series Generative Adversarial Networks(2019), Jinsung Yoon, Daniel Jarrett, Mihaela van der Schaar. [pdf]

- Focus: It proposes a novel framework for generating realistic time-series data that combines the flexibility of the unsupervised paradigm with the control afforded by supervised training. Through a learned embedding space jointly optimized with both supervised and adversarial objectives, it encourages the network to adhere to the dynamics of the training data during sampling.

-

Quant GANs: Deep Generation of Financial Time Series(2019), Magnus Wiese, Robert Knobloch, Ralf Korn, Peter Kretschmer. [pdf]

- Focus: Modeling financial time series by stochastic processes is a challenging task and a central area of research in financial mathematics. As an alternative, it introduces Quant GANs, a data-driven model inspired by the recent success of generative adversarial networks (GANs). Quant GANs consist of a generator and a discriminator function, which use temporal convolutional networks (TCNs) and thereby capture long-range dependencies such as the presence of volatility clusters. The generator function is explicitly constructed such that the induced stochastic process allows a transition to its risk-neutral distribution. The numerical results show that distributional properties for small and large lags are in excellent agreement, and that dependence properties such as volatility clusters, leverage effects, and serial autocorrelations can be generated by the generator function of Quant GANs with high fidelity.

-

Conditional Sig-Wasserstein GANs for Time Series Generation(2020), H. Ni, L. Szpruch, M. Wiese, S. Liao, and B. Xiao. [pdf]

- Focus: Generative adversarial networks (GANs) struggle to capture the temporal dependence of joint probability distributions induced by time-series data. To overcome these challenges, it integrates GANs with a mathematically principled and efficient path feature extraction called the signature of a path. The signature of a path is a graded sequence of statistics that provides a universal description for a stream of data, and its expected value characterises the law of the time-series model.

-

Decision-Aware Conditional GANs for Time Series Data(2020), He Sun, Zhun Deng, Hui Chen, David C. Parkes. [pdf]

- Focus: It introduces the decision-aware time-series conditional generative adversarial network (DAT-CGAN) as a method for time-series generation. The framework adopts a multi-Wasserstein loss on structured decision-related quantities, capturing the heterogeneity of decision-related data and providing new effectiveness in supporting the decision processes of end users. It improves sample efficiency through an overlapped block-sampling method, and provides a theoretical characterization of the generalization properties of DAT-CGAN. The framework is demonstrated on financial time series for a multi-time-step portfolio choice problem, where it shows better generative quality with regard to the underlying data and different decision-related quantities than strong GAN-based baselines.

-

TadGAN: Time Series Anomaly Detection Using Generative Adversarial Networks(2020), Alexander Geiger, Dongyu Liu, Sarah Alnegheimish, Alfredo Cuesta-Infante, Kalyan Veeramachaneni [pdf]

- Focus: Time series anomalies can offer information relevant to critical situations facing various fields, from finance and aerospace to the IT, security, and medical domains. However, detecting anomalies in time series data is particularly challenging due to the vague definition of anomalies and said data's frequent lack of labels and highly complex temporal correlations. Current state-of-the-art unsupervised machine learning methods for anomaly detection suffer from scalability and portability issues, and may have high false positive rates. In this paper, we propose TadGAN, an unsupervised anomaly detection approach built on Generative Adversarial Networks (GANs). To capture the temporal correlations of time series distributions, we use LSTM Recurrent Neural Networks as base models for Generators and Critics. TadGAN is trained with cycle consistency loss to allow for effective time-series data reconstruction. We further propose several novel methods to compute reconstruction errors, as well as different approaches to combine reconstruction errors and Critic outputs to compute anomaly scores. To demonstrate the performance and generalizability of our approach, we test several anomaly scoring techniques and report the best-suited one. We compare our approach to 8 baseline anomaly detection methods on 11 datasets from multiple reputable sources such as NASA, Yahoo, Numenta, Amazon, and Twitter. The results show that our approach can effectively detect anomalies and outperform baseline methods in most cases (6 out of 11). Notably, our method has the highest averaged F1 score across all the datasets. Our code is open source and is available as a benchmarking tool.
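To make the path signature from the Conditional Sig-Wasserstein GANs entry above more concrete, here is a minimal sketch that computes the first two signature levels of a piecewise-linear path using Chen's identity. The function name `signature_depth2` and the example path are illustrative assumptions; estimating the expected signature mentioned in that entry would mean averaging these terms over many sample paths.

```python
def signature_depth2(path):
    """Levels 1 and 2 of the signature of a piecewise-linear path.

    path : list of points, each a tuple of d coordinates.
    Returns (s1, s2), where s1[i] is the total increment in coordinate i
    and s2[i][j] is the iterated integral of dx_i then dx_j.
    """
    d = len(path[0])
    s1 = [0.0] * d
    s2 = [[0.0] * d for _ in range(d)]
    for prev, cur in zip(path, path[1:]):
        delta = [c - p for p, c in zip(prev, cur)]
        # Chen's identity for appending one straight segment:
        # the cross term uses the level-1 signature accumulated so far,
        # and a single straight segment contributes delta_i * delta_j / 2.
        for i in range(d):
            for j in range(d):
                s2[i][j] += s1[i] * delta[j] + 0.5 * delta[i] * delta[j]
        for i in range(d):
            s1[i] += delta[i]
    return s1, s2


# Example: move right by 1, then up by 1.
s1, s2 = signature_depth2([(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)])
# Half the antisymmetric part of level 2 is the signed (Levy) area
# enclosed between the path and the chord joining its endpoints.
levy_area = 0.5 * (s2[0][1] - s2[1][0])
```

Level 1 records only the net displacement, while level 2 already distinguishes the order in which the coordinates moved, which is why the signature is useful as a feature of the whole path.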

3. Optimization

4. Financial use cases

4.1 Prediction: up/down, trend

4.2 Deep trading

4.3 Deep portfolio/deep factor

-

Applying Deep Learning to Enhance Momentum Trading Strategies in Stocks(2013), L. Takeuchi and Y.-Y. Lee. [pdf]

- Focus: It uses an autoencoder composed of stacked restricted Boltzmann machines to extract features from the history of individual stock prices. Its model is able to discover an enhanced version of the momentum effect in stocks without extensive hand-engineering of input features.

-

Deep learning with long short-term memory networks for financial market predictions(2018), T. Fischer and C. Krauss. [pdf]

-

Deep Learning in Asset Pricing(2019), L. Chen et al. [pdf]

-

Deep Factor Model(2018), K. Nakagawa et al. [pdf]

- Focus: It proposes to represent a return model and risk model in a unified manner with deep learning, which is a representative model that can express a nonlinear relationship.

-

Deep Learning in Characteristics-Sorted Factor Models(2019), G. Feng et al. [pdf]

-

Deep Recurrent Factor Model: Interpretable Non-Linear and Time-Varying Multi-Factor Model(2019), K. Nakagawa et al. [pdf]

-

Deep Learning Approximation for Stochastic Control Problems(2016), J. Han and Weinan E. [pdf]

- Focus: It develops a deep learning approach that directly solves high-dimensional stochastic control problems based on Monte-Carlo sampling and tests this approach using examples from the area of optimal trading.

-

Machine learning and the cross-section of expected stock returns(2018), M. Messmer [pdf]

- Focus: Modeling expected cross-sectional stock returns has a long tradition in asset pricing. It is motivated by shortcomings of classical portfolio sorting approaches and tackles the task with alternative methodologies including classical linear models and more advanced machine learning algorithms.

-

Empirical Asset Pricing via Machine Learning(2019), S. Gu et al. [pdf]

- Focus: It performs a comparative analysis of machine learning methods for the canonical problem of empirical asset pricing: measuring asset risk premia. Improved risk premium measurement through machine learning simplifies the investigation into economic mechanisms of asset pricing and highlights the value of machine learning in financial innovation.

-

Machine Learning and Asset Pricing Models (PhD Thesis)(2018), R. A. Porsani. [pdf]

- Focus: It incorporates statistical-learning techniques into the field of cross-sectional asset pricing.

-

Deep Fundamental Factor Models(2019), M. F. Dixon and N. G. Polson [pdf]

- Focus: It develops deep fundamental factor models to interpret and capture non-linearity, interaction effects and non-parametric shocks in financial econometrics, by constructing a six-factor model of assets in the S&P 500 index and generating information ratios that are three times greater than generalized linear regression.

-

Deep Learning for Forecasting Stock Returns in the Cross-Section(2018), Masaya Abe and Hideki Nakayama [pdf]

- Focus: It implements deep learning to predict one-month-ahead stock returns in the cross-section in the Japanese stock market and investigates the performance of the method.

-

Improving Factor-Based Quantitative Investing by Forecasting Company Fundamentals(2018), J. Alberg and Z. C. Lipton [pdf]

- Focus: They first show through simulation that if they could (clairvoyantly) select stocks using factors calculated on future fundamentals (via oracle), then their portfolios would far outperform a standard factor approach.
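Several entries above are framed against classical portfolio sorting, where stocks are ranked on a characteristic and a long-short portfolio is formed from the extremes. The sketch below shows that baseline in its simplest equal-weighted form. The function name `long_short_return`, the 20% quantile, and the tickers and numbers are all hypothetical, and no paper in this list is claimed to use exactly this construction.

```python
def long_short_return(characteristic, next_return, quantile=0.2):
    """Equal-weighted return of a characteristic-sorted long-short portfolio.

    characteristic : dict ticker -> sorting signal (e.g. past momentum)
    next_return    : dict ticker -> realized return over the next period
    quantile       : fraction of stocks in each of the long and short legs
    """
    # Rank tickers from lowest to highest characteristic value.
    ranked = sorted(characteristic, key=characteristic.get)
    k = max(1, int(len(ranked) * quantile))
    short_leg, long_leg = ranked[:k], ranked[-k:]

    def mean_return(names):
        return sum(next_return[t] for t in names) / len(names)

    # Long the highest-ranked stocks, short the lowest-ranked ones.
    return mean_return(long_leg) - mean_return(short_leg)


# Made-up cross-section of five stocks.
signal = {"A": 0.9, "B": 0.5, "C": 0.1, "D": -0.3, "E": -0.8}
realized = {"A": 0.03, "B": 0.01, "C": 0.00, "D": -0.01, "E": -0.02}
spread = long_short_return(signal, realized, quantile=0.2)
```

A learned model would replace the raw characteristic with a predicted return as the sorting signal, leaving the portfolio construction step unchanged.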

4.4 Deep reinforcement learning

-

Deep Reinforcement Learning in Financial Markets(2019), S. Chakraborty [pdf]

- Focus: It explores the usage of deep reinforcement learning algorithms to automatically generate consistently profitable, robust, uncorrelated trading signals in any general financial market and develops a novel Markov decision process (MDP) model to capture the financial trading markets.

5. Explainable machine learning

-

A Unified Approach to Interpreting Model Predictions(2017), S. M. Lundberg and S.-I. Lee [pdf]

- Focus: It presents a unified framework for interpreting predictions, SHAP (SHapley Additive exPlanations). SHAP assigns each feature an importance value for a particular prediction.
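For intuition about the per-feature importance values SHAP assigns, the sketch below computes exact Shapley values for a tiny model by enumerating all feature subsets. It is not the `shap` library's API: absent features are replaced by a fixed baseline value (a simplifying assumption in place of the conditional expectations used in the paper), and the toy model and function names are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley value of each feature for the prediction model(x).

    Features outside a coalition take their value from `baseline`.
    Cost grows as 2^n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n-|S|-1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # One additive term plus one interaction term (made-up model).
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
```

Here the additive feature receives its full effect, the two interacting features split their joint effect equally, and the values sum to `toy_model(x) - toy_model(baseline)`, which is the local accuracy property SHAP relies on.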

6. Industrial application

-

Artificial Intelligence, J.P. Morgan. [more]

-

J.P.Morgan's massive guide to machine learning and big data jobs in finance, J.P. Morgan. [more]

-

Quantitative investing and the limits of (deep) learning from financial data, J.B. Heaton. [pdf]

7. Survey

-

Natural language based financial forecasting: a survey(2018), F. Z. Xing et al. [pdf]

- Focus: It clarifies the scope of natural language based financial forecasting (NLFF) research by ordering and structuring techniques and applications from related work.

-

Surveying stock market forecasting techniques – Part II: Soft computing methods(2009), G. S. Atsalakis et al. [pdf]

- Focus: It surveys more than 100 related published articles that focus on neural and neuro-fuzzy techniques derived and applied to forecast stock markets.

-

Computational Intelligence and Financial Markets: A Survey and Future Directions(2016), R. C. Cavalcante et al. [pdf]

- Focus: It gives an overview of the most important primary studies published from 2009 to 2015, which cover techniques for preprocessing and clustering of financial data, for forecasting future market movements, for mining financial text information, among others.